Ouster: Building The Interfaces Between AI And The Physical World

Ouster is positioned to provide essential perception interfaces that allow AI to act within the physical world, since digital LiDAR could become a primary sensory layer for autonomous systems.

Disclosure: The author holds a beneficial long position in Ouster, Inc. (NASDAQ: OUST). This article is provided for informational and entertainment purposes only and does not constitute financial advice. The views expressed here represent the author’s personal opinion. The author receives no compensation for this article and has no business relationship with the company mentioned. Please see the full "Legal Information and Disclosures" section below.

While we are still adjusting to the wonders of generative AI, the next technological revolution is already dawning: intelligent robots capable of acting within and interacting with the physical world. The essential foundation for this "physical AI" is perception. For over a decade, Elon Musk has argued that a vision-based approach to autonomous driving is sufficient. This is based on the logic that if humans rely primarily on vision to navigate, machines should be able to do the same.

Musk may be correct in theory; vision-based autonomous vehicles might already be safer than those driven by people. However, the question remains: are they safe enough? Fatal accidents involving autonomous vehicles receive significantly more scrutiny than those caused by humans because we are used to the dangers of human driving, but not to being endangered by autonomous machines. Thus, simply being "safer than a human" might be insufficient. To achieve widespread adoption and trust, we must strive for near-perfect safety.

At the same time, relying on vision makes systems susceptible to manipulation. Researchers have demonstrated that autonomous vehicles can be deceived by phantom objects, such as pedestrians projected onto a street, causing vehicles to brake for obstacles that do not exist. Attackers could also use invisible light patterns to trick a vision-based system into misreading signs that have not been physically touched. Even a few subtly designed stickers might disrupt vision-based perception, for example by causing a stop sign to be read as a speed limit sign.

A far more significant challenge arises from the natural environment itself: because cameras depend passively on ambient light, they can fail in blinding sun glare, sudden transitions to darkness, or low-contrast fog. While sensor fusion mitigates this risk, a camera-first architecture inherently interprets light rather than verifying physical presence.

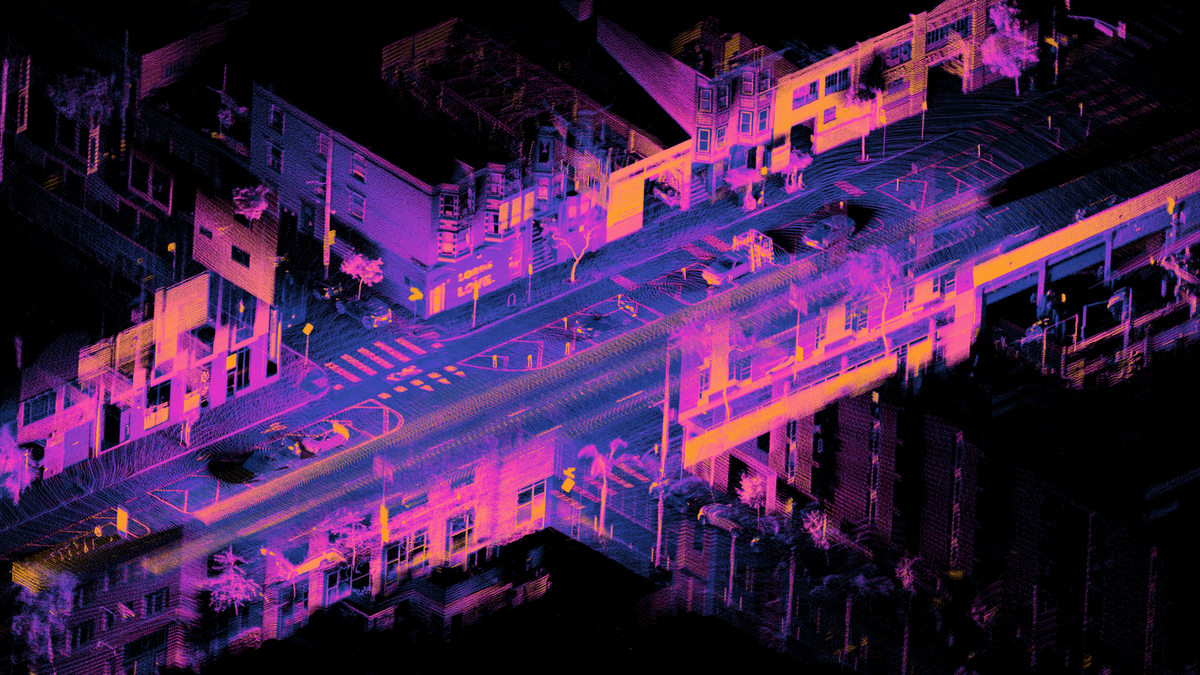

However, there is a compelling alternative to cameras for perceiving the environment: LiDAR (Light Detection and Ranging). While cameras must infer a three-dimensional world from two-dimensional images, LiDAR systems map the space around them by directly measuring the distance to surface points. So, while relying solely on cheap cameras is certainly economically appealing, accepting the limits of inferred depth is difficult to justify when a technology like LiDAR can measure depth directly.

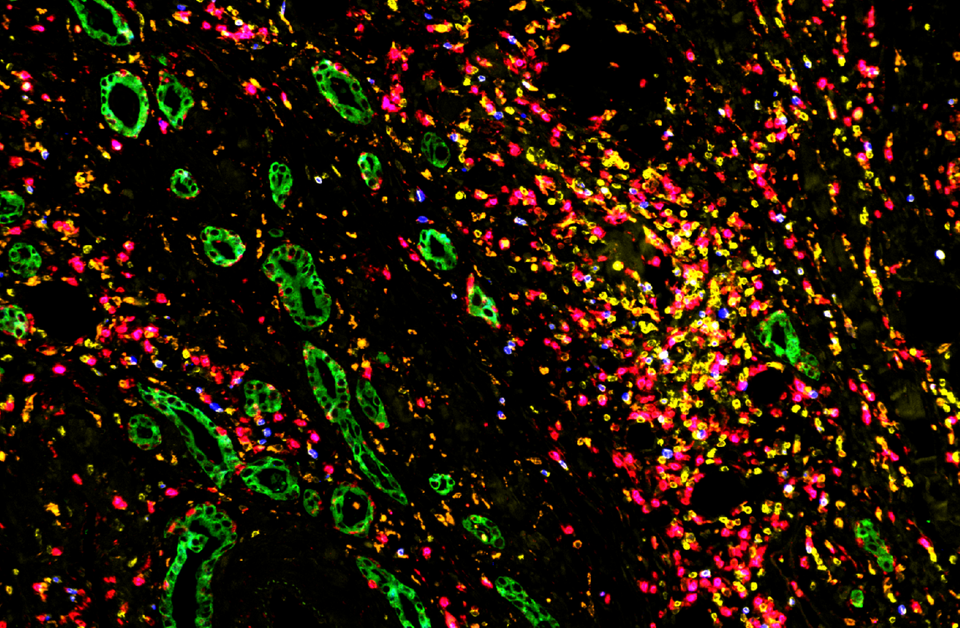

Unlike cameras, LiDAR is an active sensing technology. It emits rapid pulses of laser light, often millions per second, and measures the precise time it takes for each pulse to return to the sensor. Because each pulse travels to a surface and back, the distance to that surface is half the round-trip time multiplied by the speed of light. Combined with the known direction of each beam, these time-of-flight measurements produce a point cloud: a dense, three-dimensional map of the environment built from direct distance measurements. The primary advantage of LiDAR is its ability to provide absolute depth data regardless of lighting conditions. Furthermore, because the data is geometric rather than visual, it inherently protects individual privacy by tracking movement without capturing facial features. Historically, however, the technology was hampered by significant disadvantages: early systems were mechanically complex, prone to failure from vibration, and prohibitively expensive for mass deployment.
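The time-of-flight principle fits in a few lines of code. The sketch below is a simplified illustration, not Ouster's firmware: it assumes each return arrives as a round-trip time plus the beam's azimuth and elevation angles, and the function names are hypothetical. It halves the round-trip path to get the range, then converts range and angles into an x, y, z point.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Distance to the surface: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_xyz(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range measurement plus beam angles into a Cartesian point."""
    horizontal_m = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    return (
        horizontal_m * math.cos(azimuth_rad),
        horizontal_m * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def build_point_cloud(returns):
    """Hypothetical helper: returns is an iterable of (round_trip_s, azimuth_rad, elevation_rad)."""
    return [to_xyz(tof_to_range(t), az, el) for t, az, el in returns]


# Two returns, each arriving 200 ns after the pulse: one straight ahead, one 90 degrees to the left.
cloud = build_point_cloud([(200e-9, 0.0, 0.0), (200e-9, math.pi / 2, 0.0)])
print(cloud)
```

A pulse that returns after 200 nanoseconds corresponds to a surface about 30 meters away; repeating this for millions of pulses per second, each fired in a known direction, is what builds up the point cloud.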

San Francisco-based company Ouster (NASDAQ: OUST) transformed the landscape by inventing digital LiDAR in 2015. Traditional LiDAR systems rely on hundreds or even thousands of discrete components, including manually aligned laser emitters and detectors. This analog approach is inherently complex, expensive to manufacture, and difficult to scale. Ouster replaced this labyrinth of discrete parts with two semiconductor chips: a vertical-cavity surface-emitting laser (VCSEL) array and a single-photon avalanche diode (SPAD) detector array. This architecture allows the company to leverage the scalability of Moore’s Law. Just as digital cameras have evolved remarkably with every generation over the last two decades, Ouster’s digital approach enables exponential improvements in resolution, range, and reliability with each new generation of chips, all while driving down costs.

The company’s REV7 sensor family is powered by the proprietary L3 chip, a backside-illuminated SPAD detector that counts individual photons with remarkable efficiency. By processing over ten trillion photons per second, the L3 chip gives the REV7 sensors double the range and ten times the sensitivity of previous generations. This performance leap suggests that LiDAR progress has become a semiconductor challenge rather than an optical one. The same digital architecture powers Ouster’s diverse product lineup: the ultra-wide field-of-view OS0 for short-range navigation, the mid-range OS1, which serves as the industry workhorse, and the long-range OS2, capable of detecting objects more than 400 meters away.
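Counting individual photons turns ranging into a statistics problem: ambient light produces scattered, random detections, while the laser echo produces a cluster of detections at one arrival time. One widely used general approach, time-correlated single-photon counting, bins arrival times into a histogram and treats the tallest bin as the echo. The sketch below illustrates only that general idea under simplified assumptions (a hypothetical function name, fixed 1 ns bins, hand-picked timestamps); the article does not describe how the L3 chip actually processes photons.

```python
from collections import Counter

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def estimate_range(photon_times_s, bin_width_s=1e-9):
    """Estimate target range from photon arrival times (seconds after the pulse).

    Arrival times are binned into a histogram; the most populated bin is taken
    as the laser echo, and its centre is converted to a one-way distance.
    """
    histogram = Counter(int(t // bin_width_s) for t in photon_times_s)
    peak_bin, _count = histogram.most_common(1)[0]
    echo_time_s = (peak_bin + 0.5) * bin_width_s  # centre of the peak bin
    return SPEED_OF_LIGHT_M_PER_S * echo_time_s / 2.0


# Hand-picked example: three isolated "background" photons plus a cluster of
# four echo photons near 2.668 microseconds, i.e. a target roughly 400 m away.
photons = [0.5e-6, 1.2e-6, 3.1e-6,
           2.6683e-6, 2.6684e-6, 2.6686e-6, 2.6688e-6]
print(round(estimate_range(photons), 1))  # prints 400.0
```

With 1 ns bins, each bin spans about 15 cm of range, so this toy estimate is only that precise; finer bins or interpolating around the peak would sharpen it. More photons per second means taller, more reliable peaks, which is one intuition for why detector sensitivity translates into longer range.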

Expanding beyond spinning sensors, Ouster entered the automotive sector with its DF (Digital Flash) series. These sensors use a flash architecture that illuminates the entire field of view at once, so the device captures each frame with no moving parts. By eliminating mechanical wear and vibration, the DF series offers the durability and compact form factor required for mass-market integration. At its core is the automotive-grade Chronos chip, which integrates a SPAD detector array and digital signal processing on a single piece of silicon. Meeting rigorous ASIL-B functional safety requirements, this system delivers high-resolution 3D data for Level 2 through Level 5 autonomous driving and can be embedded directly into the vehicle body.

Ouster’s business model targets four distinct verticals: automotive, industrial, robotics, and smart infrastructure. This diversification creates a resilient revenue stream, as evidenced by the company's record third-quarter 2025 results, in which revenue rose 41% year over year to $39.5 million. In early 2023, Ouster completed a strategic merger with Velodyne, the pioneer behind the original spinning LiDAR. The combination joined Velodyne’s extensive global customer base with Ouster’s digital scalability and created a combined portfolio of over 850 patents and patent applications.

By outsourcing production to contract manufacturers Benchmark Electronics and Fabrinet in Thailand, the company maintains an asset-light model that allows rapid scaling with low capital expenditure. This manufacturing maturity helped lift GAAP gross margin to 42%, up from 38% a year earlier.

A key pillar of Ouster’s value proposition is its software ecosystem, particularly the Gemini perception platform and BlueCity. These tools transform raw point cloud data into actionable intelligence, allowing customers to detect, classify, and track objects without developing their own complex algorithms. In smart infrastructure applications, such as traffic management and crowd analytics, Gemini enables cities to monitor intersections and public spaces with centimeter-level accuracy. Unlike camera-based systems, which struggle with low light and raise privacy concerns, Ouster’s LiDAR provides anonymized 3D tracking that works in total darkness. This capability has led to widespread adoption in intelligent transportation systems, where accurate data on pedestrian and vehicle movements is crucial for improving safety and reducing congestion.
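Of the three steps (detect, classify, track), tracking is the easiest to illustrate. The sketch below is a hypothetical, minimal greedy nearest-neighbour tracker, not Gemini's API or algorithm: it assumes detection and classification have already reduced each object to a 2D centroid in meters, and it keeps an object's ID stable across frames as long as the object moves less than an assumed 1.5 m between frames.

```python
import math
from itertools import count


class CentroidTracker:
    """Hypothetical greedy nearest-neighbour tracker that keeps object IDs stable across frames."""

    def __init__(self, max_distance_m=1.5):
        self.max_distance_m = max_distance_m  # assumed maximum movement between frames
        self.tracks = {}                      # track id -> last known (x, y) in metres
        self._ids = count()                   # source of fresh track ids

    def update(self, detections):
        """Match this frame's centroids to existing tracks; unmatched ones get new ids."""
        unmatched = dict(self.tracks)
        assigned = {}
        for det in detections:
            best_id, best_d = None, self.max_distance_m
            for tid, pos in unmatched.items():
                d = math.dist(det, pos)
                if d <= best_d:
                    best_id, best_d = tid, d
            if best_id is None:
                best_id = next(self._ids)   # nothing close enough: a new object
            else:
                del unmatched[best_id]      # each track can be matched only once
            assigned[best_id] = det
        self.tracks = assigned  # tracks not seen in this frame are dropped
        return assigned


tracker = CentroidTracker()
print(tracker.update([(0.0, 0.0), (10.0, 0.0)]))  # two pedestrians appear
print(tracker.update([(10.4, 0.1), (0.5, 0.0)]))  # both move slightly; ids persist
```

Matching is greedy in detection order rather than globally optimal, and a production tracker would typically also predict motion and tolerate brief occlusions; the sketch only shows how stable IDs make counts and trajectories possible.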

The industrial automation sector has also embraced Ouster’s technology to power a new generation of autonomous mobile robots and heavy machinery. In warehouses and logistics centers, autonomous forklifts navigate complex environments with high precision. Third Wave Automation, for instance, uses up to four OS0 sensors per vehicle to achieve reliable object detection and mapping. Similarly, the mining industry has integrated these sensors into massive haul trucks and excavators. Companies such as Komatsu and Waytous rely on Ouster’s rugged design to maintain autonomous operations in harsh conditions where dust, vibration, and extreme temperatures would incapacitate less resilient sensors.

One of the most compelling applications for Ouster’s technology is in unmanned aerial vehicles, where LiDAR provides the omnidirectional awareness necessary for obstacle avoidance and simultaneous localization and mapping (SLAM). This capability is vital for navigating complex, GPS-denied environments. Consequently, LiDAR-equipped drones are transforming industries ranging from forestry to infrastructure inspection. The primary advantage here is the sensor's exceptional size-to-performance ratio. The OS1, for instance, weighs less than 500 grams yet delivers the resolution required for high-speed mapping. This lightweight design directly extends flight times and boosts operational efficiency. A prime example is Flyability's Elios 3 drone, which leverages an OS0 sensor to generate centimeter-accurate 3D maps of dangerous, confined spaces like mines and sewers.
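SLAM combines two jobs: estimating where the drone is and building a map of what it sees. The sketch below shows only the mapping half and assumes the pose (position and heading) is already known, which a real SLAM system would have to estimate at the same time. It projects each 2D LiDAR return, given as range and bearing, into world coordinates and counts hits per grid cell; the function name and the 10 cm cell size are illustrative assumptions.

```python
import math


def mark_hits(grid, pose, scan, cell_size_m=0.1):
    """Accumulate LiDAR returns into a 2D hit-count grid (hypothetical helper).

    grid:  dict mapping (col, row) cell indices to hit counts, updated in place
    pose:  (x_m, y_m, heading_rad) of the sensor in the world frame, assumed known
    scan:  iterable of (range_m, bearing_rad) returns relative to the sensor heading
    """
    x0, y0, heading = pose
    for range_m, bearing in scan:
        angle = heading + bearing
        hit_x = x0 + range_m * math.cos(angle)
        hit_y = y0 + range_m * math.sin(angle)
        cell = (math.floor(hit_x / cell_size_m), math.floor(hit_y / cell_size_m))
        grid[cell] = grid.get(cell, 0) + 1
    return grid


occupancy = {}
# The same 1.05 m return seen from one position with two different headings.
mark_hits(occupancy, (0.0, 0.0, 0.0), [(1.05, 0.0)])
mark_hits(occupancy, (0.0, 0.0, math.pi / 2), [(1.05, 0.0)])
print(occupancy)
```

Cells that accumulate many hits across scans are likely solid surfaces. Because the map is only as good as the pose estimate, a real SLAM pipeline also aligns each new scan against the map built so far to correct the pose.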

Ouster’s digital LiDAR was approved by the Department of Defense in June 2025 for use in unmanned systems. This milestone confirms that the technology meets the rigorous standards required for high-stakes government applications, and this verified security gives Ouster a strategic moat against its main competitor, the Chinese industry leader Hesai Technology. Hesai has excelled in scale, surpassing two million cumulative deliveries by late 2025 and reporting $112 million in revenue for the third quarter alone. While Hesai currently leads in mass-market automotive volume, Ouster holds a distinct advantage in critical infrastructure and defense through its Western-aligned supply chain and digital security.

The geopolitical landscape creates significant hurdles for Hesai, notably its placement on the Department of Defense blacklist of Chinese military companies. This designation was upheld by a U.S. court in July 2025, reinforcing national security concerns regarding Chinese sensors in critical infrastructure. Furthermore, a bill proposed in December 2025 seeks to phase out Chinese-made sensors from self-driving cars and ports within three years. By combining its technical moat with a Western-aligned supply chain, Ouster positions itself as the secure alternative for operators mitigating the risks of Chinese technology.

Looking toward the future, Ouster aims to be a foundational layer for the physical AI revolution. As AI models evolve, they require not only massive datasets for training but also real-time perception for inference. Ouster’s sensors fulfill both needs, providing ground-truth data for learning and precise inputs for acting in the physical world. Whether for autonomous taxis, industrial drones, or smart infrastructure, the underlying requirement is reliable sensing. Ouster addresses this through a digital architecture that leverages semiconductor economics to build a competitive moat. The company’s roadmap centers on the upcoming L4 chip, which promises major performance gains. Ultimately, Ouster is building essential interfaces that enable AI to perceive and act within the physical world.

Follow me on X for frequent updates (@chaotropy).

Legal Information and Disclosures

General Disclaimer & No Financial Advice: The content of this article is for informational, educational, and entertainment purposes only. It represents the personal opinions of the author as of the date of publication and may change without notice. The author is not a registered investment advisor or financial analyst. This content is not intended to be, and shall not be construed as, financial, legal, tax, or investment advice. It does not constitute a personal recommendation or an assessment of suitability for any specific investor. Readers should conduct their own independent due diligence and consult with a certified financial professional before making any investment decisions.

Accuracy and Third-Party Data: Economic trends, technological specifications, and performance metrics referenced in this article are sourced from independent third parties. While the author believes these sources to be reliable, the completeness, timeliness, or correctness of this data cannot be guaranteed. The author assumes no liability for errors, omissions, or the results obtained from the use of this information.

Disclosure of Interest: The author holds a beneficial long position in the securities of Ouster, Inc. (NASDAQ: OUST). The author reserves the right to buy or sell these securities at any time without further notice. The author receives no direct compensation for the production of this content and maintains no business relationship with the companies mentioned.

Forward-Looking Statements & Risk: This article contains forward-looking statements regarding product adoption, technological trends, and market potential. These statements are predictions based on current expectations and are subject to significant risks and uncertainties. Investing in technology and growth stocks is speculative, subject to rapid change and competition, and involves a risk of loss. Past performance is not indicative of future results.

Copyright: All content is the property of the author. This article may not be copied, reproduced, or published, in whole or in part, without the author's prior written consent.